Published on: January 5, 2026


Building trust in agentic tools: What we learned from our users

Discover how trust in AI agents develops through positive micro-inflection points, not big breakthroughs.

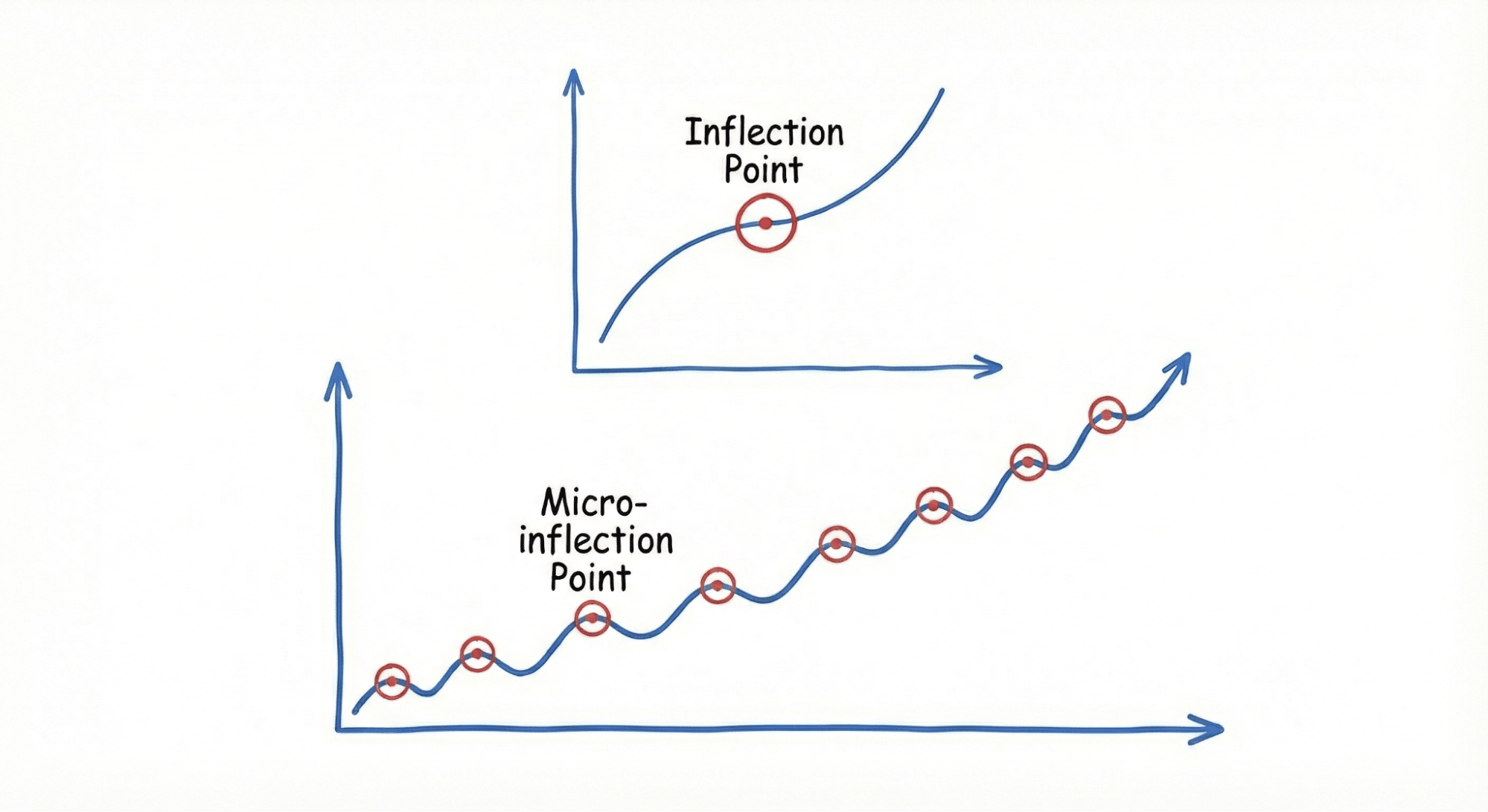

As AI agents become increasingly sophisticated partners in software development, a critical question emerges: How do we build lasting trust between humans and these autonomous systems? Recent research from GitLab's UX Research team reveals that trust in AI agents isn't built through dramatic breakthroughs, but through countless small interactions, called micro-inflection points, that accumulate over time to build confidence in the agent's reliability.

Our study of 13 agentic tool users from companies of different sizes found that adoption happens through "micro-inflection points": subtle design choices and interaction patterns that gradually build the trust developers need to rely on AI agents in their daily workflows. These findings offer practical insights for organizations implementing AI agents in their DevSecOps processes.

Traditional software tools earn trust through predictable behavior and consistent performance. AI agents, however, operate with a degree of autonomy that introduces uncertainty. Our research shows that users don't commit to AI tools through single "aha" moments. Instead, they develop trust through accumulated positive micro-interactions that demonstrate the agent understands their context, respects their guardrails, and enhances rather than disrupts their workflows.

This incremental trust-building is especially critical in DevSecOps environments where mistakes can impact production systems, customer data, and business operations. Each small interaction either reinforces or erodes the foundation of trust necessary for productive human-AI collaboration.

Four pillars of trust in AI agents

Our research identified four key categories of micro-inflection points that build user trust:

Safeguarding actions

Trust begins with safety. Users need confidence that AI agents won't cause irreversible damage to their systems. Essential safeguards include:

- Confirmation dialogs for critical changes: Before executing operations that could affect production systems or delete data, agents should pause and seek explicit approval

- Rollback capabilities: Users must know they can undo agent actions if something goes wrong

- Secure boundaries: For organizations with compliance requirements, agents must respect data residency and security policies without constant manual oversight
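The first two safeguards can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not how any particular agent platform implements them: `AgentAction`, `SafeExecutor`, and the `confirm` callback are hypothetical names invented for this example.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class AgentAction:
    """One step an agent wants to take (hypothetical structure)."""
    description: str
    run: Callable[[], None]    # performs the change
    undo: Callable[[], None]   # reverses the change
    critical: bool = False     # True for destructive or production-affecting steps


@dataclass
class SafeExecutor:
    """Asks for approval on critical actions and keeps an undo stack."""
    confirm: Callable[[str], bool]  # hypothetical hook that shows a dialog to the user
    completed: List[AgentAction] = field(default_factory=list, init=False)

    def execute(self, action: AgentAction) -> bool:
        # Pause and seek explicit approval before a critical change.
        if action.critical and not self.confirm(action.description):
            return False
        action.run()
        self.completed.append(action)
        return True

    def rollback(self) -> int:
        """Undo every completed action, most recent first; return how many were undone."""
        undone = 0
        while self.completed:
            self.completed.pop().undo()
            undone += 1
        return undone


# Usage: a critical change runs only after approval and can be undone afterwards.
state = {"replicas": 2}
scale_up = AgentAction(
    description="Scale production to 5 replicas",
    run=lambda: state.update(replicas=5),
    undo=lambda: state.update(replicas=2),
    critical=True,
)
executor = SafeExecutor(confirm=lambda message: True)  # stand-in for a real dialog
executor.execute(scale_up)
executor.rollback()
```

Pairing every `run` with an `undo` when the action is created is one way to make sure rollback is always possible. A real system would also need to handle actions that cannot be reversed.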

Providing transparency

Users can't trust what they can't understand. Effective AI agents maintain visibility through:

- Real-time progress updates: Especially crucial when user attention might be needed

- Action explanations: Before executing high-stakes operations, agents should clearly communicate their planned approach

- Clear error handling: When issues arise, users need immediate alerts with understandable error messages and recovery paths

This transparency transforms AI agents from mysterious black boxes into comprehensible partners whose logic users can follow and verify.

Remembering context

Nothing erodes trust faster than having to repeatedly teach an AI agent the same information. Trust-building agents demonstrate memory through:

- Preference retention: Accepting and applying user feedback about coding styles, deployment patterns, or workflow preferences

- Context awareness: Remembering previous instructions and project-specific requirements

- Adaptive learning: Evolving based on user corrections without requiring explicit reprogramming

Our research participants consistently highlighted frustration with tools that couldn't remember basic preferences, forcing them to provide the same guidance repeatedly.
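As a rough illustration of preference retention, the sketch below stores user corrections in a JSON file so they survive across sessions. The `PreferenceStore` class, its method names, and the file layout are assumptions made for this example; the research does not prescribe any particular storage mechanism.

```python
import json
from pathlib import Path


class PreferenceStore:
    """Persists user corrections so the agent does not need to be told twice."""

    def __init__(self, path: Path):
        self.path = path
        # Load earlier preferences if a previous session saved any.
        self.prefs = json.loads(path.read_text()) if path.exists() else {}

    def remember(self, key: str, value: str) -> None:
        """Record a preference and write it to disk immediately."""
        self.prefs[key] = value
        self.path.write_text(json.dumps(self.prefs, indent=2))

    def as_instructions(self) -> str:
        """Render stored preferences as text to include with the agent's next task."""
        return "\n".join(f"- {key}: {value}" for key, value in sorted(self.prefs.items()))
```

A later session that creates `PreferenceStore` with the same path would see everything saved earlier, so a correction such as "use 4-space indentation" only has to be given once.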

Anticipating needs

Trust emerges when AI agents proactively support user workflows. Agents could support the user in the following ways:

- Pattern recognition: Learning user routines and predicting tasks based on time of day or project context

- Intelligent agent selection: Automatically recognizing which specialized agents are most relevant for specific tasks

- Environment analysis: Understanding coding environments, dependencies, and project structures without explicit configuration

These anticipatory capabilities transform AI agents from reactive tools into proactive partners that reduce cognitive load and streamline development processes.

Implementing trust-building features

For organizations deploying AI agents, our research suggests several practical implementations:

- Start with low-risk environments: Allow users to build trust gradually by beginning with non-critical tasks. As confidence grows through positive micro-interactions, users naturally expand their reliance on AI capabilities.

- Design for continuous orchestration, including human intervention: Unlike traditional automation, AI agents should know when to pause and seek human input. These pauses assure users that they keep ultimate control while still benefiting from AI efficiency. Agents also need autonomy-level controls so that autonomy can be calibrated for different types of action and different contexts.

- Maintain audit trails: Every agent action should be traceable, allowing users to understand not just what happened, but why the agent made specific decisions.

- Personalize the experience: Agents that adapt to individual user preferences and team workflows create stronger trust bonds than one-size-fits-all solutions.
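Two of the recommendations above, autonomy-level controls and audit trails, lend themselves to a short sketch. The one below is a minimal example under stated assumptions: the three autonomy levels, the `AutonomyPolicy` class, and the rule keys are all hypothetical and were chosen only to illustrate the idea.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum
from typing import Dict, List, Tuple


class Autonomy(Enum):
    ASK_FIRST = "ask_first"    # agent pauses for human approval
    NOTIFY = "notify"          # agent proceeds and tells the user
    AUTONOMOUS = "autonomous"  # agent proceeds without interrupting


@dataclass(frozen=True)
class AuditEntry:
    timestamp: str
    action_type: str
    environment: str
    level: Autonomy
    reason: str  # the agent's stated rationale, kept so users can see why


@dataclass
class AutonomyPolicy:
    # Maps (action type, environment) to a level; unlisted pairs ask first.
    rules: Dict[Tuple[str, str], Autonomy]
    audit_log: List[AuditEntry] = field(default_factory=list)

    def decide(self, action_type: str, environment: str, reason: str) -> Autonomy:
        level = self.rules.get((action_type, environment), Autonomy.ASK_FIRST)
        # Record what was decided and why, so each decision stays traceable.
        self.audit_log.append(AuditEntry(
            timestamp=datetime.now(timezone.utc).isoformat(),
            action_type=action_type,
            environment=environment,
            level=level,
            reason=reason,
        ))
        return level


# Usage: low-risk work runs freely, anything unlisted falls back to asking.
policy = AutonomyPolicy(rules={
    ("format_code", "dev"): Autonomy.AUTONOMOUS,
    ("deploy", "staging"): Autonomy.NOTIFY,
})
policy.decide("format_code", "dev", "Linter reported style violations")
policy.decide("deploy", "production", "Pipeline passed on main")
```

Defaulting to `ASK_FIRST` for unknown combinations reflects the "start with low-risk environments" advice: autonomy is granted explicitly, one context at a time, rather than assumed.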

The compounding impact of trust

Our findings reveal that trust in AI agents follows a compound growth pattern. Each positive micro-interaction makes users slightly more willing to rely on the agent for the next task. Over time, these small trust deposits accumulate into deep confidence that transforms AI agents from experimental tools into essential development partners.

This trust-building process is delicate – a single significant failure can erase weeks of accumulated confidence. That's why consistency in these micro-inflection points is crucial. Every interaction matters.

Supporting these micro-inflection points is a cornerstone of enabling software teams and their AI agents to collaborate at enterprise scale through intelligent orchestration.

Next steps

Building trust in AI agents requires intentional design focused on user needs and concerns.

Organizations implementing agentic tools should:

- Audit their AI agents for trust-building micro-interactions

- Prioritize transparency and user control in agent design

- Invest in memory and learning capabilities that reduce user friction

- Create clear escalation paths for when agents encounter uncertainty

Key takeaways

- Trust in AI agents builds incrementally through micro-inflection points rather than breakthrough moments

- Four key categories drive trust: safeguarding actions, providing transparency, remembering context, and anticipating needs

- Small design choices in AI interactions have compound effects on user adoption and long-term reliance

- Organizations must intentionally design for trust through consistent, positive micro-interactions

Help us learn what matters to you: Your experiences and insights are invaluable in shaping how we design and improve agentic interactions. Join our research panel to participate in upcoming studies.

Explore GitLab’s agents in action: GitLab Duo Agent Platform extends AI's speed beyond just coding to your entire software lifecycle. With your workflows defining the rules, your context maintaining organizational knowledge, and your guardrails ensuring control, teams can orchestrate while agents execute across the SDLC. Visit the GitLab Duo Agent Platform site to discover how intelligent orchestration can transform your DevSecOps journey.

Whether you're exploring agents for the first time or looking to optimize your existing implementations, we believe that understanding and designing for trust is the key to successful adoption. Let's build that future together!
